Dr Prasanth V

Editor, JPID

Errors

When we compare study group/s with a control group in a research, there can be ‘errors’. Error is the difference between the ‘fact’ and our ‘finding’. In other words, error is the distortion in ‘population parameter’ when compared to the ‘true value’. Difference between the ‘fact’ and our ‘finding’ can be due to three reasons

Random error

Difference between the ‘fact’ and our ‘finding’ is

purely due to ‘chance’ alone. Random error is non

directional and will average out in repeated samples

(result in some samples will show overestimation and

in some samples show underestimation). Whenever

we study a sample (which we normally do), random

errors will invariably creep in. Random error will

be ‘zero or minimal’ only when we study the entire

population. As sample size increases random error

decreases and hence reliability (precision) of the

research increases.

Reliability (precision) simply means repeatability

of the measurements we make, that is, repeated

researches under the similar conditions give identical

results. Reliability doesn’t guarantee accuracy

(validity) of the measurements though it’s a pre-requirement of validity.

Bias

Any error introduced into the study for which a

cause can be identified is called as bias. Result

of the research will show repeated overestimation

or underestimation indicating a directional and

systematic difference from the true value. Bias is

considered ‘directional’ because the result will not

average out in repeated samples (result in every

sample will be either over or under estimation).

Validity of the study will decrease if the bias is more.

Validity is the ability of the research to measure what

it is intended to measure. A research is valid only if

its result corresponds to the truth.

Bias has nothing to do with the sample size. Strong

study design and adherence to the research protocol

will help to reduce the bias. David L Sacket identified

19 bias and later Bernard Choi extended the list to

65. Even though both words are considered synonyms

there are some non-sampling errors other than

bias. Wrong instrument for measurement, improper

variable definition etc fall in that list.

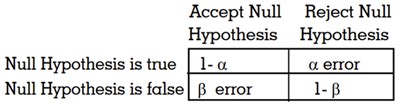

Type 1 (α) and Type II (β) errors

In αerror, a true null hypothesis will be rejected. It

is also called ‘false positive error’ because we have

proved some association which truly is not there.

Normally in medical/dental research, maximum

permissible level of α error is 5% (chance that

observed difference due to chance/sampling error

is less than 5% OR probability of incorrectly rejecting

the null hypothesis is less than/equal to 5%). αlevel

of significance is set by the researcher before the

statistic is computed. Once the null hypothesis is

rejected, the only error possible is ‘α’.

In βerror, a false null hypothesis will be accepted.

It is also called ‘a false negative error’ because our

research did not identify an association which in fact

is existing. Probability of committing Type II is called

βand is usually kept bellow 20%. If null hypothesis is

not rejected, the only error possible is β. Whenever

null hypothesis not rejected, the researcher should

address level of βerror and power. Power (1-β) is

the ability to reduce βerror and it is the probability

that a false null hypothesis is correctly rejected. Non

rejection of null hypothesis can also be because of

low power of the study.